Stuff I Learned - 2nd Semester, Week #2

This past week has been a blur of neuroscience. Sometimes a good blur, and sometimes the kind of blur whose soundtrack is "what???"

In my Systems Neuroscience (Systems) course, this past week's lectures focused on audition. While audition may sound like a subject within the Theater Department, the Systems class has been focused on the sense-of-hearing kind of audition.

Sound refers to the vibrations that occur in the air. These vibrations surround our bodies, and we have special sensors that allow us to perceive and interpret some of these vibrations.

The vibrations that fall within a specific range (~20 to 20,000 vibrations/second, or 20 Hz to 20 kHz) are what we call audible. These audible vibrations carry a lot of useful information: the sound of a familiar voice, a warning siren, the position of a chirping bird, etc. Our sense of hearing allows us to decode these vibrations into information that can help us relate to our world.

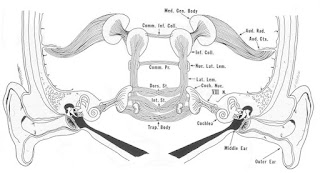

The process of decoding sound involves structures in the ear, its associated nerves, the brain stem and the brain. As the diagram indicates, the interconnected and crossing circuitry can initially look like a big bowl of spaghetti!

While the structures are complicated, there is a spare elegance that underlies the apparent randomness. To best perceive our surroundings, it's helpful to know the sound's intensity (loudness), its frequency (boombastic bass or singing highs) and where it came from (directionality). The criss-crossing of circuits is largely about determining these factors in the fastest and most efficient way possible.

The full set of structures and features of the auditory system goes beyond even a graduate-level neuroscience course, so how about I pick one of the properties of hearing mentioned above and explore it more deeply?

Locating sound, for example, depends on the sound itself. That is, not all sounds are located in the same way. Lower-frequency sounds (from vocals down to bomb-the-bass territory) are located by calculating the tiny time lag between when a sound arrives at one ear and when it arrives at the other ear. These time lags are well under a millisecond, yet your nervous system can perceive them. And by knowing these tiny time lags and the approximate speed of sound, your brain can locate the source of a lower-frequency sound with a high degree of accuracy.
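To get a feel for just how tiny these lags are, here is a rough sketch of the arithmetic. It is not how neurons do it, and it leans on assumptions of mine: a speed of sound of 343 m/s, ears about 15 cm apart, a sound source far away, and a simple straight-line path between the ears (real sound has to bend around the head, so real lags come out somewhat longer). The function names are made up for illustration.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees C
EAR_SPACING_M = 0.15        # assumption: ears about 15 cm apart


def itd_seconds(angle_deg):
    """Time lag between the ears for a distant source.

    angle_deg is measured from straight ahead (0) toward one side (90).
    Uses the simple straight-line model: extra path = spacing * sin(angle).
    """
    extra_path_m = EAR_SPACING_M * math.sin(math.radians(angle_deg))
    return extra_path_m / SPEED_OF_SOUND_M_S


def angle_from_itd(itd_s):
    """Invert the model: recover the source angle from a measured time lag."""
    ratio = itd_s * SPEED_OF_SOUND_M_S / EAR_SPACING_M
    ratio = max(-1.0, min(1.0, ratio))  # guard against rounding past +/-1
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    for angle in (0, 30, 90):
        lag_us = itd_seconds(angle) * 1e6
        print(f"{angle:>2} degrees -> {lag_us:.0f} microseconds")
```

Under these assumptions, even a sound coming from directly to one side produces a lag of less than half a millisecond.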

If lower-frequency sounds are located by their time lag (Interaural Time Delay, or ITD), then what about higher-frequency sounds like a flute or a chirping bird? These higher-frequency sounds are located by their Interaural Level Difference, or ILD. A sound is louder in the ear that's closer to the source than in the ear that's farther away. This level difference, often too small to notice consciously, is enough information for your brain to accurately calculate where a higher-frequency sound is coming from.
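Level differences are usually expressed in decibels. As a small sketch of what "louder in one ear" means as a number, the snippet below compares the RMS (root-mean-square) amplitude of two made-up signals, one per ear. The sample values and function names are invented for illustration, and this is only the bookkeeping, not a model of what the brain does.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def ild_db(near_ear, far_ear):
    """Interaural level difference in decibels (positive = near ear is louder)."""
    return 20.0 * math.log10(rms(near_ear) / rms(far_ear))


if __name__ == "__main__":
    # Hypothetical samples: the far ear hears the same wave at half the amplitude.
    near = [0.2, -0.2, 0.2, -0.2]
    far = [0.1, -0.1, 0.1, -0.1]
    print(f"ILD: {ild_db(near, far):.1f} dB")
```

Halving the amplitude at the far ear works out to a difference of about 6 dB.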

Special geeky overload:

What determines this crossover from ITD to ILD?

The difference in location strategies is related to the size of your head! Since the wavelength of a sound is related to its pitch (the lower the pitch, the longer the wave), a relationship exists between the way that sound passes by your head and its pitch. Wavelengths that are bigger than your head (~15 cm) correspond to frequencies below roughly 2,000 vibrations/second, which covers the fundamental pitch of human speech and everything below it. Conversely, wavelengths that are smaller than your head correspond to higher-pitched sounds.

Sounds with waves bigger than your head tend to pass around the skull without losing much power (i.e., a very small ILD, leaving the ITD as the more useful cue). Sounds with smaller waves tend to be blocked by the head, so the ILD becomes more pronounced, while the ITD becomes harder to use because more than one wave cycle can fit between the ears. As a result of these skull-dimension-based differences, the mechanisms for locating sound vary as pitch varies.
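For anyone who wants the arithmetic behind that crossover, here is a small sketch. It assumes a speed of sound of 343 m/s and the ~15 cm head size mentioned above, and it treats the switch as a hard cutoff, which is my simplification; in a real head the handoff from ITD to ILD is gradual and the two cues overlap. The function names are made up for illustration.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees C
HEAD_SIZE_M = 0.15          # the ~15 cm head size used in this post


def wavelength_m(frequency_hz):
    """Wavelength of a sound in air: speed of sound divided by frequency."""
    return SPEED_OF_SOUND_M_S / frequency_hz


def likely_cue(frequency_hz):
    """Crude rule of thumb: waves bigger than the head favor ITD, smaller favor ILD."""
    return "ITD" if wavelength_m(frequency_hz) > HEAD_SIZE_M else "ILD"


# Frequency whose wavelength exactly matches the head size.
CROSSOVER_HZ = SPEED_OF_SOUND_M_S / HEAD_SIZE_M

if __name__ == "__main__":
    print(f"Crossover near {CROSSOVER_HZ:.0f} Hz")
    for f in (100, 1000, 4000, 10000):
        print(f"{f:>5} Hz: wavelength {wavelength_m(f) * 100:.1f} cm -> {likely_cue(f)}")
```

With these numbers the crossover lands a little above 2,000 vibrations/second.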

OK - geeky overload complete

I'd best get back to studying now. Systems class has moved from audition to vestibulation, which I'm finding to be every bit as interesting. The vestibular sense is interconnected with the other sensorimotor systems, and I'm eager to learn more.

Thanks for reading, and have a great week!

The auditory system is a marvel.
